The AI Deception Crisis: Why You Can't Trust What You See Online Anymore (And What To Do About It)

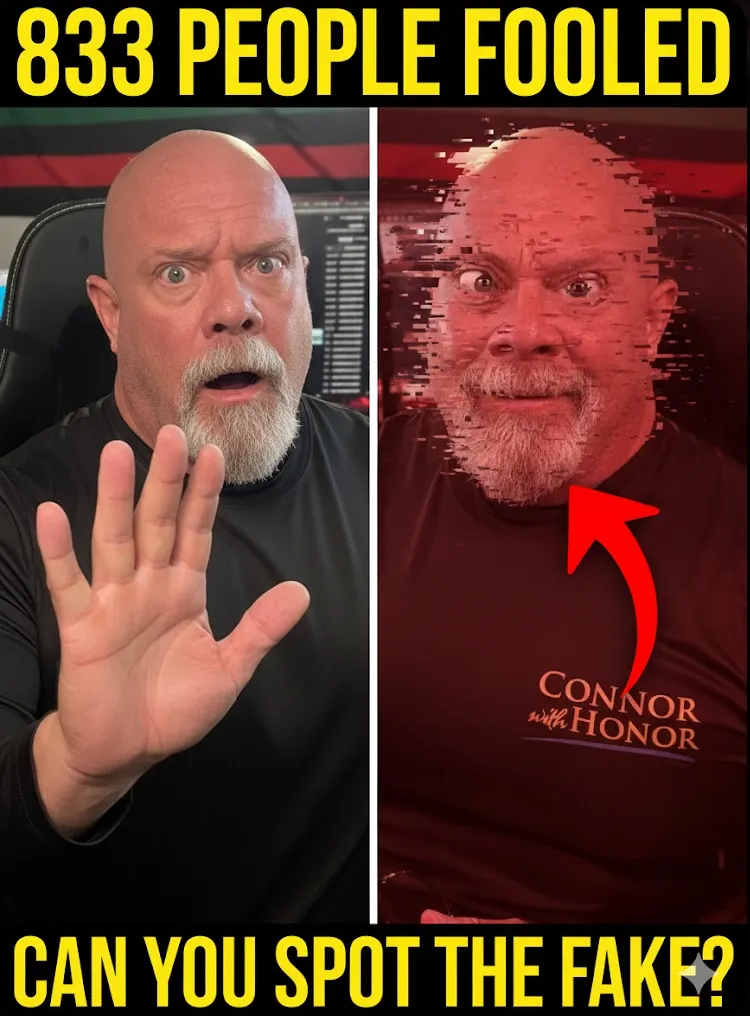

TL;DR: AI-generated fake videos have become sophisticated enough that 833 engaged viewers failed to identify a completely fabricated Victor Davis Hanson video. The technology has perfected voice cloning, lip sync, and visual authenticity - only the content gives it away. This isn't about being smart or savvy anymore. It's about systematic verification before you believe, share, or react to anything online. Your kids, your parents, and everyone in your circle need this reality check before AI slop becomes completely indistinguishable from reality.

The Wake-Up Call: When 833 People Miss the Obvious

I just watched 833 people get completely fooled. Not by a sophisticated political operation. Not by a major media conspiracy. By a fake Victor Davis Hanson video that was so convincing, not a single commenter in that entire thread identified it as AI-generated content.

Let me be clear about something upfront: I'm not the smartest person in any room. Never have been. But if I can spot this and 833 other people couldn't, we've got a serious problem on our hands.

Here's what made this particular case so disturbing: the AI had everything right. The voice? Perfect. The lip sync? Flawless. The mannerisms? Spot on. The only thing that gave it away was the content itself - the words coming out of "his" mouth weren't Victor Davis Hanson's style. They weren't his talking points. They weren't his rhetorical patterns.

But nobody caught it. Nobody in that entire comment section said, "Hey, wait a minute, this doesn't seem right."

That should terrify you.

What's This Cat's Deal? The Question You Need to Ask About Every Single Video

Every video you watch online needs to pass through a simple filter: "What's this cat's deal?"

Whether it's YouTube, Instagram, TikTok, Facebook, or any other platform, you need to ask:

What is this person trying to accomplish?

Is this really them or is it somebody else?

Is this AI-generated or not?

What's the purpose behind this content?

Who benefits if I believe this?

This isn't paranoia. This is basic media literacy in 2024 and beyond.

The problem isn't just that AI can create convincing fake videos. The problem is that we've already given AI the entire playbook. We've fed it everything we find interesting, everything that grabs our attention, everything that makes us click, comment, and share.

Companies are making fortunes producing AI-generated videos specifically designed to exploit your psychology. They know what makes you angry. They know what makes you curious. They know what makes you feel validated or outraged or entertained.

And they're using that knowledge to create content that looks real, sounds real, and feels real - but isn't.

The Governor, The President, and Why Truth Still Matters

Look, I don't care if you love the governor or hate the governor. I don't care about your political affiliation with the president. What I care about is that when videos are put out that aren't true, it's a problem for everybody.

Because here's the thing: if you can't trust what you're seeing, you can't make informed decisions. You can't participate meaningfully in democracy. You can't even have honest conversations with your neighbors about what's actually happening in the world.

When AI-generated fake videos of public figures go viral, it doesn't just mislead people about that specific incident. It erodes trust in everything. Pretty soon, people start doubting real videos because they've been burned by fake ones. Or worse, they believe fake videos and dismiss real ones because the fake content aligns better with their existing beliefs.

The verification process matters. The truth matters. And right now, we're losing our collective ability to distinguish between what's real and what's artificially generated slop.

The Technology Has Already Won the Surface Battle

Let's talk about where AI video generation actually stands right now, not where it might be in five years or what the theoretical limits are.

The AI has already mastered:

Voice Cloning: The technology can perfectly replicate anyone's voice with just a few minutes of audio samples. Pitch, tone, rhythm, accent, speech patterns - all of it captured and reproduced with startling accuracy.

Lip Sync: The days of obviously fake lip movements are over. Current AI can match mouth movements to generated speech so precisely that even frame-by-frame analysis won't reveal obvious artifacts.

Facial Expressions: Micro-expressions, emotional responses, natural movement patterns - AI has analyzed millions of hours of real video and can now generate these with frightening authenticity.

Visual Consistency: Lighting, skin texture, environmental context - the technical quality has reached a point where visual inspection alone won't catch most fakes.

Contextual Believability: The AI knows how to frame shots, use appropriate backgrounds, include realistic environmental sounds, and create content that fits within expected formats and styles.

Here's what the AI hasn't quite perfected yet: the subtle patterns of how specific individuals actually communicate. The word choices they make. The logical structures they prefer. The rhetorical devices they consistently use.

That's the gap. That's what gave away the fake Victor Davis Hanson video. Not the voice. Not the visuals. The words.

But here's the terrifying part: that gap is closing fast.

Why "I Can Always Tell" Is Dangerous Thinking

Some of you reading this are thinking, "Well, I'm pretty savvy about this stuff. I can always identify fake AI videos."

Cool. Good for you. Seriously.

But let me give you a reality check: it's going to get more difficult. Much more difficult. And at some point, even you won't be able to reliably identify AI slop.

This isn't a challenge to your intelligence or your media literacy skills. This is simple mathematics. As AI systems process more data, analyze more patterns, and refine their models, the gap between real and fake continues to shrink.

The Victor Davis Hanson example proves this point perfectly. These weren't stupid people in that comment section. These weren't unsophisticated consumers of media. These were people engaged enough to watch a video about complex political topics and motivated enough to leave comments.

And all 833 of them missed it.

If you think you're immune to this because you're smart or experienced or skeptical, you're exactly the kind of person who's most vulnerable. Because you've stopped verifying. You've stopped asking questions. You've started trusting your gut instead of doing the work.

The Money Behind the Manipulation

Let's talk about why this is happening at scale.

These AI-generated videos aren't being created by bored teenagers or political activists working in isolation. They're being produced by companies that have identified a profitable business model:

Generate content that exploits psychological triggers

Get massive engagement (views, comments, shares)

Build channels to mega-star status quickly

Bank advertising revenue and sponsorship deals

Scale up and repeat

The economics are compelling. Why hire talent, produce legitimate content, and slowly build an audience when you can use AI to create dozens of videos per day that are specifically engineered to go viral?

The platforms themselves have limited incentive to crack down aggressively. Engagement is engagement. Views are views. Ad revenue is ad revenue. Whether the content is real or AI-generated doesn't fundamentally change the business model from their perspective.

This creates a race to the bottom where authentic content creators are competing against entities that can produce unlimited amounts of optimized content with minimal investment.

What You Need to Tell Your Kids (And Your Parents)

This isn't just about you being careful. This is about everyone in your periphery understanding the new reality of online content.

For Your Kids:

Your kids are growing up in a world where they can't trust their eyes and ears. They need to understand that videos aren't evidence anymore. They need to develop systematic verification habits before they encounter content designed to manipulate them.

Talk to them about:

Not sharing content immediately after seeing it

Checking multiple sources before believing claims

Understanding that emotional reactions are being engineered

Recognizing when content is designed to make them angry or scared or excited

The difference between healthy skepticism and conspiracy thinking

For Your Parents:

If your parents didn't grow up with digital media, they're even more vulnerable. They often have strong pattern recognition for traditional forms of deception but lack the framework for understanding AI-generated content.

Help them understand:

That people who look and sound exactly like trusted figures might not actually be those people

The importance of going directly to official sources rather than relying on shared videos

How to check URLs and verify website authenticity

That if something seems too shocking or convenient, it probably needs verification

That it's okay to wait before forming an opinion or sharing content
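For the URL-checking item above, here is a minimal Python sketch of what "check the URL" can mean in practice. The allowlist is only an illustration: the idea is that you keep your own short list of domains you have personally confirmed, and the function name `is_official_url` is hypothetical. It is a sketch under those assumptions, not a complete phishing defense.

```python
from urllib.parse import urlsplit

# Illustrative allowlist: replace with domains you have confirmed yourself.
OFFICIAL_DOMAINS = {"honorelevate.com", "businessaivoice.com"}

def is_official_url(url: str, official_domains=OFFICIAL_DOMAINS) -> bool:
    """Return True only for https links whose host is an allowlisted
    domain or a subdomain of one."""
    # Links are often pasted without a scheme; assume https in that case.
    parts = urlsplit(url if "://" in url else "https://" + url)
    if parts.scheme != "https":
        return False
    host = (parts.hostname or "").lower().rstrip(".")
    # Compare against the END of the host name, so a lookalike such as
    # "honorelevate.com.login-check.example" does not pass.
    return any(host == d or host.endswith("." + d) for d in official_domains)
```

The point of the sketch is the comparison at the end: the part of the address that matters is the end of the host name, not whether a familiar name appears somewhere in the link.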

For Everyone Else:

Everyone in your circle needs to hear this message: we're in a new phase of information warfare, and the weapons have gotten significantly more sophisticated.

The Wait-and-Verify Protocol

Here's a simple protocol that will save you from most AI-generated content traps:

When you see something shocking, controversial, or perfectly aligned with your existing beliefs:

Step 1: Take a breath. Don't react immediately. The content was likely designed to trigger an immediate emotional response.

Step 2: Wait 5-10 minutes. If it's real and important, it won't disappear.

Step 3: Check multiple mainstream sources. If something significant happened, multiple news organizations will be covering it.

Step 4: Look for official statements. Go directly to verified social media accounts or official websites.

Step 5: Examine the original source. Who posted it? What's their track record? What's their motivation?

Step 6: If it's only showing up in one place or on channels you've never heard of, treat it as unverified.

The Truth Emerges Rule: Give it a few minutes and the truth will come out. If a few minutes go by and you're not seeing verification anywhere else, then either it's a major media and government conspiracy (unlikely) or it's not actually true (much more likely).
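If it helps to see the protocol as a checklist, here is a rough Python sketch of the decision it describes. The names (`Claim`, `verification_status`) and the thresholds are assumptions for illustration: the 5-minute minimum comes from Step 2, and "multiple sources" is read here as two or more. Step 5, judging the poster's track record and motivation, is a human call and is not modeled.

```python
from dataclasses import dataclass

@dataclass
class Claim:
    minutes_waited: float        # Step 2: time since you first saw it
    mainstream_sources: int      # Step 3: independent outlets reporting it
    official_confirmation: bool  # Step 4: verified account or official site confirms it

def verification_status(claim: Claim, min_wait: float = 5, min_sources: int = 2) -> str:
    """Return 'wait', 'corroborated', or 'unverified' for a claim."""
    # Steps 1-2: do nothing until the waiting period has passed.
    if claim.minutes_waited < min_wait:
        return "wait"
    # Steps 3-4: an official statement or multiple outlets corroborate it.
    if claim.official_confirmation or claim.mainstream_sources >= min_sources:
        return "corroborated"
    # Step 6 and the Truth Emerges Rule: nothing else backs it up.
    return "unverified"
```

For example, `verification_status(Claim(10, 0, False))` returns `"unverified"`: ten minutes have passed and nobody else is reporting it, so it does not get shared.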

This isn't about being overly skeptical or paranoid. This is about being appropriately cautious in an environment where the technology to deceive has massively outpaced our collective ability to detect deception.

The "Assassinated on Live TV" Test

Here's an extreme example that illustrates the problem: if you see somebody shot on live TV, or somebody claiming a public figure was assassinated, you need to check a few other sources before accepting it as truth.

That should sound absurd. That should sound like paranoia. But we're rapidly approaching a point where it's simply prudent skepticism.

Because the technology exists right now to create completely convincing footage of events that never happened. And as that technology continues to improve, the burden of verification shifts from "is there evidence this is fake?" to "is there evidence this is real?"

Don't knee-jerk into doing something you're going to regret. Don't share content that could cause panic or violence or massive disruption without being absolutely certain it's legitimate.

Give it a few minutes. Check multiple sources. Verify through official channels. Then decide whether to engage with or share the content.

The Content Pattern Recognition Approach

Since visual and audio analysis are becoming increasingly unreliable, we need to shift our focus to content patterns. This is what caught the fake Victor Davis Hanson video for me.

Learn to recognize:

Speaking Patterns: How does this person typically structure their arguments? What rhetorical devices do they use consistently? What's their vocabulary level and word choice patterns?

Topic Focus: What subjects does this person actually talk about? What issues do they consistently return to? What topics are they unlikely to address?

Logical Structure: How does this person build arguments? Do they use specific frameworks or approaches? What's their pattern of evidence presentation?

Ideological Consistency: Not that people can't change their minds, but dramatic ideological shifts in a single video should raise red flags.

Contextual Plausibility: Where would this person actually record a video? What format would they use? What platform would they choose?

This requires more work than just watching a video and accepting it at face value. But that's exactly the point. Easy consumption of content is what makes us vulnerable to AI-generated deception.
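To make "vocabulary and word choice" a little more concrete, here is a deliberately crude Python sketch that compares the word usage of a suspicious transcript against text you know is genuine. This is an assumption-laden illustration, not how I caught the fake and not a reliable detector: word-count similarity misses most of what makes a speaker's style distinctive, and the function names are hypothetical.

```python
import math
import re
from collections import Counter

def word_counts(text: str) -> Counter:
    """Count lowercase words in a transcript."""
    return Counter(re.findall(r"[a-z']+", text.lower()))

def cosine_similarity(a: Counter, b: Counter) -> float:
    """Return 0.0 (no shared vocabulary) up to 1.0 (identical word mix)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * \
           math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0
```

You would call it as `cosine_similarity(word_counts(known_text), word_counts(suspicious_text))`. A low score is only a prompt to look closer, and a high score proves nothing, so it supports the verification steps rather than replacing them.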

The Local Santa Clarita Angle

Here in Santa Clarita Valley, we're not immune to this problem. In fact, our tight-knit community makes us potentially more vulnerable to locally-targeted AI-generated content.

Imagine AI-generated videos that appear to show:

Local politicians making controversial statements

Local business owners involved in scandals

Community leaders endorsing specific initiatives or candidates

Local news figures reporting false information

The smaller the community, the more damage a convincing fake video can do. Because we trust local sources more. We're more likely to believe content about people and institutions we feel connected to.

This makes verification even more critical at the local level. When you see content about local figures or local issues, take the extra step to verify directly with those sources before sharing or reacting.

The AI Arms Race Nobody's Winning

Here's the uncomfortable truth: we're in an arms race between AI generation and AI detection, and detection is losing badly.

Every advancement in detection technology teaches the generation systems what patterns to avoid. Every new detection method becomes training data for creating more convincing fakes.

Companies are developing "deepfake detection" tools, but they're fundamentally playing defense against an offense that has unlimited resources and improving capabilities.

The long-term solution isn't going to be technological. It's going to be social and cultural. We need to build robust verification habits at a societal level. We need to create social norms around not sharing unverified content. We need to establish institutional mechanisms for rapid fact-checking and authentication.

But in the meantime, individuals need to protect themselves and their networks by developing better media literacy and verification protocols.

The Platform Responsibility Question

Should platforms be doing more to identify and label AI-generated content? Absolutely.

Are they going to? That's a much more complicated question.

Platforms face several challenges:

The technology to detect AI-generated content is imperfect and often lags behind generation capabilities

False positives would penalize legitimate content creators

Aggressive detection and removal could drive users to platforms with fewer restrictions

The sheer volume of content makes comprehensive screening nearly impossible

Many platforms profit from engagement regardless of content authenticity

This means you can't rely on platforms to protect you. The blue checkmark doesn't guarantee authenticity. The "verified" label doesn't mean the content is real. Platform warnings about manipulated media are often applied inconsistently.

You are your own first and best line of defense against AI-generated deception.

What Happens When Nothing Can Be Verified?

The worst-case scenario isn't just that we get fooled by fake videos. It's that we lose the ability to establish shared truth entirely.

When everything could be fake, nothing can be definitively proven to be real. When AI-generated content is indistinguishable from authentic content, how do we resolve disputes about what actually happened?

This creates an environment where:

People only believe what aligns with their existing views

Evidence becomes meaningless in debates and disputes

Those with resources to generate convincing content control narratives

Trust in all institutions and media continues to erode

Conspiracy theories become impossible to definitively refute

Actual scandals and wrongdoing can be dismissed as "deepfakes"

We're not quite there yet. But we're heading in that direction rapidly. And the time to build defenses is now, while we still have some ability to distinguish real from fake.

The Individual Action Plan

So what do you actually do about this? Here's your practical action plan:

Daily Habits:

Ask "what's this cat's deal?" about every video

Wait before sharing controversial content

Check multiple sources before believing claims

Go directly to official sources when possible

Don't trust emotional reactions as evidence of truth

Technical Steps:

Follow verified official accounts directly

Bookmark legitimate news sources

Use reverse image search for suspicious content

Check URLs carefully before clicking

Enable two-factor authentication on your accounts

Social Responsibility:

Don't share unverified content

Correct misinformation in your networks

Teach these protocols to others

Model good verification behavior

Admit when you've shared something that turned out to be false

Mental Framework:

Understand that your emotional reactions are being engineered

Recognize that sophisticated technology is working against your perception

Accept that you will sometimes be fooled and that's okay

Prioritize accuracy over speed in your information consumption

Build in delay between consumption and reaction

The Educational Imperative

If you take nothing else from this article, understand this: education about AI-generated content needs to happen now, at every level, for every age group.

Schools need to be teaching media literacy that specifically addresses AI-generated content. Not as a unit in computer class. As a core competency that gets addressed across all subjects.

Workplaces need training on identifying and responding to AI-generated content, particularly in industries where misinformation could have serious consequences.

Community organizations need to host workshops and discussions about these issues, particularly for populations that might be more vulnerable to AI-generated deception.

And individuals need to take responsibility for educating themselves and their networks about these threats.

The Santa Clarita AI Perspective

As someone who works in AI integration and automation here in Santa Clarita Valley, I can tell you that the technology itself isn't the enemy. AI has enormous potential to improve efficiency, enhance creativity, and solve complex problems.

But like any powerful technology, it can be used for good or harm. And right now, we're seeing a massive wave of harmful applications that exploit the gap between what AI can create and what people can detect.

My work with HonorElevate and Santa Clarita Artificial Intelligence focuses on legitimate business applications - automation that adds value, voice agents that improve customer service, systems that enhance productivity. That's the positive potential of AI.

But I also have a responsibility to educate my community about the risks. About the deceptive applications. About the need for verification and skepticism.

That's why I'm recording videos like this. That's why I'm having these conversations. Because the technology is moving faster than public awareness, and that gap is dangerous.

The Personal Commitment to Truth

Here's my personal commitment: I will continue calling out AI slop when I see it. I will continue educating about verification protocols. I will continue demonstrating what responsible AI use looks like.

But I also need you to make a commitment:

Commit to being the person in your network who doesn't just share content without verification. Be the one who asks questions. Be the one who waits for confirmation. Be the one who admits when they got something wrong.

It's not glamorous. It's not going to make you popular in the moment. But it's going to protect you and your network from being manipulated by increasingly sophisticated AI-generated content.

Looking Forward: The Next Six Months

AI generation capabilities are advancing rapidly. In the next six months, we're likely to see:

Even more convincing voice cloning

Better integration of contextual details

More sophisticated mimicking of individual communication patterns

Increased volume of AI-generated content across all platforms

New techniques that defeat current detection methods

This isn't speculation. This is based on current development trajectories and known capabilities in the pipeline.

Which means the window for building robust verification habits is closing. The harder it becomes to detect AI-generated content, the more critical systematic verification becomes.

Start now. Build the habits now. Educate your network now. Before AI slop becomes completely indistinguishable from reality.

The Bottom Line

Every video you see online needs to pass through your personal verification filter. Every single one. Even this one.

Ask what the creator's deal is. Ask what they're trying to accomplish. Ask if the content matches what you know about that person or topic. Ask whether the claim is being verified by reliable sources.

Don't trust your eyes. Don't trust your ears. Don't trust your gut reaction.

Trust your verification process.

Because 833 people just got fooled by a fake Victor Davis Hanson video. And if they can be fooled, so can you. So can I. So can everyone.

The only defense is systematic skepticism combined with active verification. It's more work. It's less convenient. It's not as satisfying as immediate reaction and sharing.

But it's the only way to maintain some connection to truth in an age of increasingly sophisticated AI-generated deception.

Be wary. Be skeptical. Be systematic in your verification. And help others do the same.

Because it's only going to get harder from here.

FAQ: AI-Generated Fake Videos and Media Literacy

Q: How can I tell if a video is AI-generated?

A: Currently, your best approach is analyzing content patterns rather than visual or audio quality. Look at whether the words, arguments, and communication style match what you know about that person. Check if the claims are being verified by multiple reliable sources. Use reverse image search on screenshots from the video. But honestly, detection is becoming increasingly difficult as the technology improves.

Q: Are there tools that can detect deepfake videos?

A: Several deepfake detection tools exist, but they're in an arms race with generation technology. Detection tools can help but shouldn't be your only line of defense. They often produce false positives and false negatives. Your best protection is systematic verification through multiple sources rather than relying on a single detection tool.

Q: What makes the Victor Davis Hanson fake video example so significant?

A: It demonstrated that even engaged, thoughtful viewers can be completely fooled by current AI generation technology. The fact that 833 people commented without anyone identifying it as AI-generated shows how sophisticated these fakes have become. The only giveaway was the content style, not the technical execution.

Q: Should I assume all videos online are fake until proven real?

A: Not quite that extreme, but you should assume all videos need verification before you share them or make decisions based on them. Start with healthy skepticism, especially for content that triggers strong emotions or perfectly aligns with your existing beliefs. The verification burden is now on the consumer, not the creator.

Q: How do I explain this to my elderly parents who aren't tech-savvy?

A: Focus on simple principles: Don't share things immediately after seeing them. Check with multiple news sources before believing big claims. Remember that videos aren't proof anymore. If something seems shocking, wait and see if other places are reporting it. Encourage them to call you before sharing anything controversial or surprising.

Q: What about live streams? Can those be faked in real-time?

A: Real-time deepfake technology exists but is currently less sophisticated than pre-recorded fakes. However, this is advancing rapidly. For truly critical situations, verify through multiple independent live sources and official channels rather than relying on a single stream.

Q: Are AI-generated videos illegal?

A: Laws vary by jurisdiction and context. Creating and sharing deepfakes with intent to defraud or harm is illegal in many places. Political deepfakes around elections may have specific regulations. But the legal framework is still developing and enforcement is challenging. Don't rely on legal consequences to prevent creation - focus on your own verification practices.

Q: How do platforms like YouTube and Facebook handle AI-generated content?

A: Platform policies vary and are evolving. Some require disclosure of synthetic media, but enforcement is inconsistent. Platforms struggle with the volume of content and the difficulty of detection. Don't rely on platforms to label or remove AI-generated content - many fakes circulate without any warning labels.

Q: What's the difference between AI-generated content and edited footage?

A: Traditional editing manipulates real footage - cutting, rearranging, or altering existing content. AI generation creates entirely new footage that never happened, synthesizing video, audio, and movement from scratch. Both can mislead, but AI generation is more difficult to detect because it doesn't rely on actual source footage.

Q: Can AI generate videos of me if someone has photos and videos of me online?

A: Yes. Current technology can create convincing deepfakes from relatively small amounts of source material available on social media. This is why privacy settings and limiting public video/photo content matters more than ever.

Q: Should I delete all my social media posts to prevent AI training on my data?

A: That ship has sailed for most people - the data has already been harvested. Focus instead on limiting future exposure, using privacy settings, being mindful of what you share, and building verification habits within your network so the people in it will question suspicious content allegedly from you.

Q: What role do AI voice agents play in this problem?

A: AI voice agents (like those I build through BusinessAIvoice.com) are legitimate business tools for customer service and engagement. The technology itself is neutral. Voice cloning becomes problematic when used deceptively to impersonate someone without disclosure. Legitimate applications disclose their AI nature; malicious applications conceal it.

Q: How can journalists and news organizations maintain credibility in this environment?

A: Robust verification processes, transparent sourcing, clear labeling of any synthetic media, quick corrections when errors occur, and building institutional trust through consistent accuracy. News organizations need to invest heavily in verification infrastructure and teach audiences how their processes work.

Q: What happens if someone creates a deepfake of me?

A: Document it immediately. Report it to the platform. Contact law enforcement if it's harassing or defamatory. Notify your network that the content is fake. Consider consulting with a lawyer about options. In some jurisdictions, you may have legal recourse, but prevention through limiting available source material is easier than removal after the fact.

Q: Are there watermarking or authentication methods being developed?

A: Several authentication methods are being explored: blockchain verification, content credentials, cryptographic signing, and metadata standards. But these only work if widely adopted and if viewers actually check them. They're part of the solution but not a complete answer.
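As a simplified illustration of the idea behind these methods, here is a Python sketch of a fingerprint check. It assumes a hypothetical workflow in which the real publisher posts a SHA-256 digest of the original file on their official site. Real content-credential systems rely on digital signatures and signed metadata, which this sketch does not model.

```python
import hashlib
import hmac

def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 fingerprint of a file's bytes as hex text."""
    return hashlib.sha256(data).hexdigest()

def matches_published_digest(data: bytes, published_hex: str) -> bool:
    """True only if the file's fingerprint equals the one the publisher posted."""
    # Normalize whitespace and letter case before comparing.
    expected = published_hex.strip().lower()
    return hmac.compare_digest(sha256_hex(data), expected)
```

Changing even one byte of the file produces a different fingerprint, so a match shows the file is identical to what was published. A match also only means something if the digest itself came from a source you already trust, and re-encoded copies of a genuine video will not match.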

Q: How do I teach my kids to navigate this without making them paranoid?

A: Frame it as critical thinking, not paranoia. Teach them to ask questions about all content: Who made this? What's their goal? How can we verify this? Make it a game or puzzle to solve rather than a threat to fear. Model good verification behavior in your own media consumption.

Q: What's the worst-case scenario if this problem isn't addressed?

A: Complete erosion of shared truth and evidence-based discourse. A society where people only believe information that confirms existing beliefs because nothing can be definitively verified. Increased political and social polarization. Loss of trust in all institutions. Inability to hold wrongdoers accountable because all evidence can be dismissed as "deepfake."

Q: Can AI detection ever catch up with AI generation?

A: Unlikely in a definitive way. It's an asymmetric problem - generation only needs to fool detection once; detection needs to catch everything. Each improvement in detection becomes training data for generation. The long-term solution must be social and procedural, not purely technological.
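The asymmetry can be put in rough numbers. The Python sketch below assumes, purely for illustration, that a detector catches each fake independently with the same probability; real detectors will not behave that neatly, and the function name is hypothetical.

```python
def chance_at_least_one_slips_through(catch_rate: float, fakes: int) -> float:
    """Probability that at least one of `fakes` videos evades a detector
    that catches each one independently with probability `catch_rate`."""
    # All fakes are caught with probability catch_rate ** fakes,
    # so at least one gets through with the complement of that.
    return 1.0 - catch_rate ** fakes
```

Under those assumptions, a detector that catches 99% of fakes still lets at least one through about 63% of the time when 100 fakes are posted: `chance_at_least_one_slips_through(0.99, 100)` is roughly 0.63.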

Q: What's the first thing I should do after reading this article?

A: Talk to your kids and your parents about verification protocols. Set up a family or friend group system for fact-checking suspicious content before sharing. Bookmark reliable news sources. Commit to waiting before sharing anything shocking or controversial. Start building the habit of asking "what's this cat's deal?" about everything you watch online.

Summary: The New Reality of AI-Generated Content

We are living through a fundamental shift in the nature of online content. AI generation technology has reached a sophistication level where visual and audio analysis alone cannot reliably distinguish real from fake. The fake Victor Davis Hanson video that fooled 833 engaged viewers demonstrates this reality clearly.

The technology has perfected voice cloning, lip sync, facial expressions, and visual consistency. What hasn't been perfected yet - but is rapidly improving - is the subtle mimicking of individual communication patterns and content style. This narrow detection window is closing fast.

The solution isn't technological detection tools, which will always lag behind generation capabilities. The solution is systematic verification habits:

Ask "what's this cat's deal?" about every video

Wait 5-10 minutes before reacting or sharing

Check multiple mainstream sources

Verify through official channels

Examine content patterns, not just technical quality

Teach these protocols to everyone in your network

This affects everyone: kids growing up with AI-generated content, parents from pre-digital generations, professionals who make decisions based on information, and citizens trying to participate in democratic processes.

The stakes are high. When we lose the ability to establish shared truth, we lose the foundation for productive discourse, accountability, and collective decision-making.

Companies are profiting from creating AI-generated content designed to exploit psychological triggers and maximize engagement. Platforms have limited incentive to aggressively police this content. The responsibility falls on individual consumers to develop robust verification practices.

The situation will get worse before it gets better. AI generation capabilities are advancing faster than public awareness or institutional responses. The time to build defensive habits is now, while there's still some ability to distinguish real from fake.

Every video requires verification. Even this one. Especially this one.

Because if 833 people can miss an obvious fake, none of us are as immune as we think we are.

About Connor MacIvor

Connor MacIvor is an AI Growth Architect and founder of HonorElevate, providing AI automation services to businesses in Santa Clarita Valley. With 25+ years of real estate experience and 20+ years of law enforcement background with LAPD, Connor brings unique perspectives on verification, trust-building, and character assessment to the AI integration space. He hosts weekly AI training webinars on Mondays at 10 a.m. and creates educational content about both the opportunities and risks of artificial intelligence technology.

For AI automation services, visit HonorElevate.com

For AI voice agent solutions, visit BusinessAIvoice.com

For real estate referrals, visit SantaClaritaOpenHouses.com