Google's Complete AI Stack: Why They Might Actually Pull This Off

The Vertical Integration Advantage That Could Define the Future of Artificial Intelligence

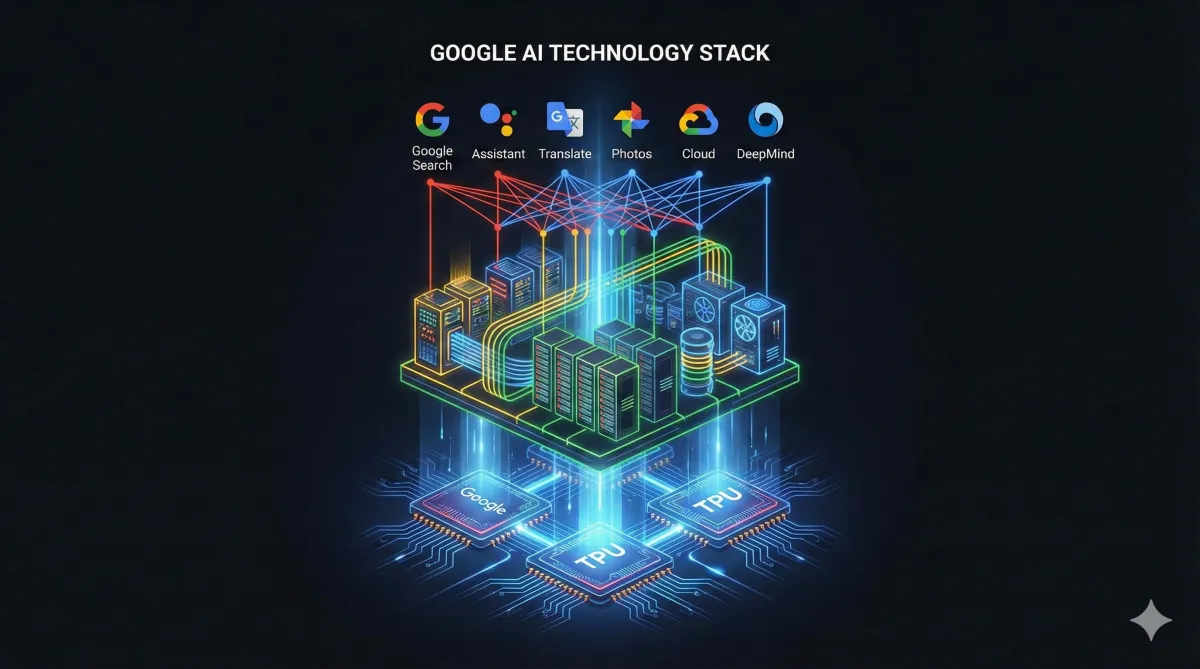

The artificial intelligence race has never been more intense, and while everyone's been focused on the flashy announcements and benchmark wars, something far more fundamental has been taking shape. Google isn't just competing in AI—they're rewriting the rules of the game entirely. And here's why they might actually pull this off: they own the entire stack, from silicon to software, in a way that no other company currently does.

The Infrastructure Advantage: Owning It All

When we talk about Google's position in the AI landscape, we need to understand what "complete stack" really means. This isn't just about having good software engineers or a lot of data. This is about controlling every single layer of the technology pyramid that makes modern AI possible.

At the very foundation, Google has something most AI companies can only dream about: their own custom silicon. The TPU (Tensor Processing Unit) architecture represents years of investment and iteration specifically designed for machine learning workloads. These aren't general-purpose chips adapted for AI. They are AI-first processors built from the ground up for neural network computation, and today they handle the massive computational demands of training and running large language models.

The significance of this cannot be overstated. While competitors like OpenAI must rely on NVIDIA's GPUs or other third-party hardware, Google can optimize every aspect of their chip design for their specific models and architectures. They can make trade-offs that favor their exact use cases. They can innovate at the hardware level in ways that are simply impossible when you're buying off-the-shelf components.

But it doesn't stop at the chips. Google also controls the data center architecture, the networking infrastructure, the cooling systems, and the power management. They've spent decades building out one of the most sophisticated cloud infrastructures on the planet, and now all of that expertise is being brought to bear on the AI problem.

This vertical integration means that when Google engineers are optimizing their models, they're not hitting artificial boundaries imposed by someone else's hardware decisions. They can co-design the software and hardware together, creating efficiencies that horizontal players simply cannot achieve.

The Gemini Moment: When Everything Changed

The release of Gemini 1.5 and subsequent iterations marked a watershed moment in the AI industry. The performance metrics weren't just good—they were shocking. People who had been paying close attention to the space were genuinely flabbergasted by what Google had achieved.

There were even rumors of "code red" situations at competing companies. Whether or not those rumors were true, the sentiment behind them was real: Google had just demonstrated that they could compete at the highest levels of AI performance, and they did it using their own infrastructure.

But here's what makes this even more interesting: Google trained their most recent models entirely on their own TPU chips. This wasn't a hybrid approach. This wasn't using NVIDIA for some parts and custom silicon for others. This was a complete, end-to-end training run on Google's own hardware.

The implications are enormous. It means Google has proven that their chip architecture is capable of producing state-of-the-art results. It means they're not dependent on external suppliers for their most critical resource. And it means they have room to iterate and improve in ways that companies dependent on third-party hardware simply don't.

Anthropic's Strategic Bet: Validation from the Outside

One of the most telling signs of Google's infrastructure advantage is who else is choosing to use it. Anthropic, the AI safety-focused company founded by former OpenAI researchers, has made a strategic decision to train models on Google's TPU infrastructure, alongside the other hardware it uses.

This is significant for several reasons. First, Anthropic is backed by serious AI expertise—these aren't people making uninformed decisions about infrastructure. They've seen what's out there, they understand the trade-offs, and they've chosen Google's chips.

Second, Anthropic has its own distinct approach to AI development, with a heavy focus on constitutional AI and safety. The fact that Google's infrastructure can support these varied approaches demonstrates the flexibility and power of the platform.

Third, by offering their chips "for lease" rather than just for internal use, Google is building an ecosystem. They're creating a situation where other companies become invested in the success of Google's hardware architecture, which in turn drives more innovation and optimization.

This isn't just Google betting on themselves—this is other serious players in the AI space validating that bet.

The Microsoft-OpenAI Conundrum: A Different Model

To understand why Google's position is so strong, it helps to look at the contrast with their primary competitor: the Microsoft-OpenAI partnership.

Microsoft and OpenAI have a complex relationship. Microsoft has invested billions, and they have deep integration of OpenAI's technology into their products. But they're still fundamentally separate companies with separate interests. OpenAI doesn't own Microsoft's infrastructure, and Microsoft doesn't control OpenAI's research direction.

This creates friction points that don't exist within Google. Decision-making is more complex. Resource allocation requires negotiation. Long-term strategic planning has to account for the possibility that the partnership might evolve or even dissolve.

Meanwhile, OpenAI is still dependent on NVIDIA's hardware for most of their training runs. They're subject to the same supply constraints, the same pricing pressures, and the same architectural limitations as everyone else in the industry who's buying GPUs.

This doesn't mean Microsoft and OpenAI can't compete—they clearly can and do. But it does mean they're playing a fundamentally different game than Google is. They're assembling best-of-breed components and trying to integrate them effectively. Google is building a unified system from the ground up.

The Training Run Reality Check

One of the most interesting revelations to come out of recent industry analysis is the reality of training runs. According to informed observers, OpenAI hadn't done a full retraining of their models between certain versions—they'd been making improvements and optimizations, but not complete rebuilds with updated architectures and expanded data.

Then came GPT-4o, which did represent a more comprehensive training effort. But by that time, Google had already demonstrated what was possible with their Gemini training runs on TPU infrastructure.

This highlights another advantage of owning your own chip architecture: you can iterate on a schedule that makes sense for your research goals, not on a schedule dictated by hardware availability or procurement cycles. When Google's researchers decide they want to do a training run with a new architecture or approach, they don't have to get in line for GPU allocation or negotiate contracts with cloud providers. They can just... do it.

This kind of organizational agility might not seem like much, but in a field moving as fast as AI, the ability to execute quickly on new ideas can be the difference between leading and following.

The Scalability Question: Is Bigger Always Better?

As the AI industry has matured, one of the dominant narratives has been about scale. More parameters, more training data, more compute—these have been the primary levers for improving model performance.

But there are growing questions about whether this approach can continue indefinitely. Are we going to see continued breakthroughs simply by building bigger data centers and running longer training jobs? Or are we approaching some kind of limit where additional scale produces diminishing returns?

This is where Google's complete stack advantage becomes even more critical. If the path forward requires new chip architectures optimized for different training paradigms, Google is positioned to explore that. If it requires tighter integration between model architecture and hardware design, Google can do that. If it means rethinking how data centers are structured to support new approaches to distributed training, Google has the capability.

Companies that are dependent on external hardware providers will be constrained by what those providers choose to offer. Google can chart their own course.
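To make the diminishing-returns question above a little more concrete, here is a minimal sketch. It assumes model loss follows a simple power law in compute, which is a common way to frame scaling but is only an assumption here. The function names and the constants `a` and `b` are hypothetical and chosen purely for illustration; they are not measurements from Gemini, GPT, or any other real model.

```python
# Hypothetical illustration only: the power-law form and the constants
# a and b are assumptions, not measurements from any real model.

def loss(compute: float, a: float = 10.0, b: float = 0.1) -> float:
    """Assumed power-law loss curve: loss = a * compute ** -b."""
    return a * compute ** -b


def gain_from_doubling(compute: float) -> float:
    """Absolute loss reduction obtained by doubling compute at a given level."""
    return loss(compute) - loss(2 * compute)


if __name__ == "__main__":
    # Each doubling costs twice as much as the last one,
    # yet under this assumed curve it buys a smaller improvement.
    for c in (1e3, 1e6, 1e9):
        print(
            f"compute={c:.0e}  loss={loss(c):.3f}  "
            f"gain from doubling={gain_from_doubling(c):.4f}"
        )
```

Under this assumed curve, every doubling of compute still helps, but the improvement shrinks as the budget grows. If real systems behave anything like this, the cost of each unit of compute starts to matter more than the raw amount of it, which is exactly where owning the hardware could pay off.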

The Path We Should Be Taking: Narrow Alignment First

Here's where we need to pump the brakes a bit and think about what we actually want from AI technology. The race toward AGI (Artificial General Intelligence) and potentially ASI (Artificial Superintelligence) is exciting from a technological standpoint, but it also carries significant risks.

What if, instead of racing toward general intelligence, we focused first on narrowly aligned models—AI systems designed to excel at specific, beneficial tasks?

Imagine having access to an AI specifically optimized for cancer research, capable of analyzing protein structures and suggesting novel treatment approaches at superhuman speed. Or a model focused entirely on solving infectious disease challenges, trained on every relevant paper and dataset, with architecture specifically designed for that domain.

What about AI systems dedicated to extending human longevity, analyzing the complex interplay of factors that affect aging and health? Or models focused on making financial markets more efficient and accessible, helping individuals make better investment decisions?

These narrowly focused models could deliver enormous value while potentially avoiding some of the risks associated with broadly capable general intelligence. They could be easier to align with human values because their scope is limited and their objectives are clear.

The beautiful thing is that Google's infrastructure advantage applies just as much to these narrow models as it does to general-purpose systems. In fact, custom hardware might be even more valuable when you can optimize for a specific domain rather than trying to be all things to all problems.

The Open Model Wild Card: Democratizing AI

While the big players duke it out with proprietary systems, there's another force reshaping the landscape: open-source AI models. These freely available models are creating real stress for the "pay-to-play" providers because they're proving that high-quality AI doesn't have to be locked behind expensive API calls.

The open-source movement in AI is doing what open source has always done: it's democratizing access and accelerating innovation through collaborative development. When a model is open, thousands of researchers and developers can experiment with it, optimize it, and find novel applications that the original creators never imagined.

This creates an interesting dynamic for Google. On one hand, they benefit from the open-source ecosystem—they can learn from public research and incorporate the best ideas. On the other hand, they need to demonstrate enough value in their proprietary offerings to justify their infrastructure investments.

But here's the thing: the companies best positioned to thrive in an open-source-heavy world are those with infrastructure advantages. Running models at scale, even open-source ones, requires serious compute resources. Google can offer that compute, running on their custom silicon, potentially more efficiently than anyone else.

The China Factor: Innovation Under Constraint

We can't have an honest conversation about the future of AI without addressing what's happening in China. When DeepSeek released their models, it sent shockwaves through the industry. Here was a Chinese company producing competitive AI results despite being cut off from access to the most advanced chips.

This is a perfect example of necessity driving innovation. When you can't rely on having the best hardware, you have to get more creative with your architecture, your training approaches, and your optimization techniques. Chinese researchers and engineers have been forced to do more with less, and in some cases, they've found genuinely novel approaches that work better than brute-force scaling.

There's a broader lesson here about the AI race. It's not just about who has the biggest data centers or the most advanced chips. It's also about creativity, resourcefulness, and the ability to find efficiencies that others have overlooked.

This is where cultural factors come into play. In the United States, we tend to celebrate entertainment figures—actors, musicians, athletes. In China, there's a different cultural emphasis on scientists and engineers. Neither approach is inherently better or worse, but they do create different incentive structures and attract talent toward different pursuits.

The question isn't which culture is "right"—it's what happens when these different approaches collide in the race toward advanced AI. My bet is that we'll see innovations from multiple directions, with different countries and companies excelling at different aspects of the technology.

The Content Creation Revolution: Everyone's a Creator

Looking further ahead, we're approaching a point where AI will enable anyone to create professional-quality content. Want to make your own TV show? That's coming. Want to produce a feature film? That'll be possible too. Want to generate a complete podcast series or write and illustrate a graphic novel? AI will make all of that accessible to individuals.

This democratization of content creation could be one of the most transformative applications of AI technology. It breaks down the barriers between having an idea and producing a polished final product. It means that creative expression is no longer limited by technical skill or access to expensive production resources.

But it also raises interesting questions about curation, quality, and what happens when everyone can produce infinite amounts of content. We'll need new systems for discovery, new ways of filtering signal from noise, and new approaches to monetization that work in a world of abundance rather than scarcity.

Why Google Might Actually Pull This Off

So let's bring this all together. Why might Google be the company that ultimately wins the AI race, or at least establishes itself as the clear leader?

Complete Vertical Integration: They own every layer of the stack, from silicon to software, allowing for optimizations that competitors simply cannot match.

Proven Performance: Gemini's results have demonstrated that their infrastructure can produce state-of-the-art models. This isn't theoretical—it's been proven in practice.

External Validation: Serious players like Anthropic are choosing to build on Google's infrastructure, validating the technical approach.

Iteration Speed: Owning their own chips means they can move at their own pace, not waiting for external hardware providers to catch up.

Economic Model: By offering their TPUs for lease, they're building an ecosystem that increases the value of their infrastructure investments.

Scale and Resources: Google has the financial resources and existing infrastructure to make massive bets on AI without it being an existential risk.

Data Advantage: Let's not forget that Google sits on one of the world's largest collections of human-generated data, which remains crucial for training advanced AI systems.

Institutional Knowledge: Decades of experience building and operating massive distributed systems gives them deep expertise in exactly the kind of engineering challenges that AI training presents.

The Road Ahead: Safety, Alignment, and Access

As we look toward the future of AI, there are some principles that should guide our development:

Safety First: Before we race toward AGI, we should ensure we have robust safety measures in place. The potential downside of getting this wrong is too severe to ignore.

Narrow Before General: Let's prove we can build and align narrowly focused AI systems before we attempt to create generally intelligent ones.

Democratic Access: The benefits of AI shouldn't accrue only to a small elite. We need to ensure broad access to these tools, even if that means prioritizing slightly less capable systems that can be widely deployed over cutting-edge systems restricted to a few.

Transparency and Openness: The open-source movement in AI is healthy and should be encouraged, even by companies with proprietary offerings.

International Cooperation: AI development is happening globally, and we need frameworks for cooperation and safety that cross national boundaries.

Google's infrastructure advantage puts them in a strong position to lead on all of these fronts. They have the resources to invest in safety research. They have the capacity to build both narrow and general systems. They have the scale to offer broad access. And they have the global presence to participate in international discussions about AI governance.

The Bottom Line

The AI race is far from over, and predicting the future in such a rapidly evolving field is always risky. But if you're looking at the fundamentals—who controls the most critical resources, who has the most complete technology stack, who has proven they can deliver results—Google is in an extraordinarily strong position.

They've built an end-to-end system that doesn't depend on external suppliers for critical components. They've demonstrated that this system can produce world-class results. They've attracted external validation from serious players in the AI space. And they have the resources and expertise to continue iterating and improving.

Does this mean Google has already won? No. Competition from OpenAI, Anthropic, Chinese researchers, and the open-source community will continue to drive innovation in unexpected directions. But it does mean that Google has built a foundation that will be very hard for competitors to match.

They're not just playing the AI game—they've built their own playing field, and they own all the equipment. That's a powerful position to be in.

For those of us watching this space, whether as developers, investors, or simply curious observers, Google's complete stack strategy offers a glimpse into how the AI industry might evolve. The winners won't necessarily be those with the best algorithms or the most data—they'll be those with the most complete, vertically integrated systems that can iterate and improve faster than anyone else.

Google might actually pull this off. And if they do, it won't be because of any single brilliant breakthrough. It'll be because they built the infrastructure that makes continuous breakthroughs possible.

The future of AI is being built right now, one chip, one training run, one model at a time. And Google has positioned itself to be at the center of it all.

About Santa Clarita Artificial Intelligence

At Santa Clarita Artificial Intelligence, we're dedicated to helping businesses and individuals understand and leverage the transformative power of AI technology. Whether you're looking to implement AI solutions, understand the competitive landscape, or simply stay informed about the latest developments, we're here to guide you through the AI revolution.

What are your thoughts on Google's infrastructure advantage? Do you think vertical integration is the key to winning the AI race, or will more nimble, specialized players find ways to compete effectively? Let us know in the comments below.